One of the characteristics of the information age is the pervasiveness of data. Whether it’s an estimated delivery date for an order or a report on how much time you spend on your phone, you use data every day to inform your decisions and set goals.

On a bigger scale, organizations use data in the same way. They hold information on clients, staff, goods, and services, all of which must be standardized and distributed across different teams and systems. Even outside partners and vendors may be given access to this information.

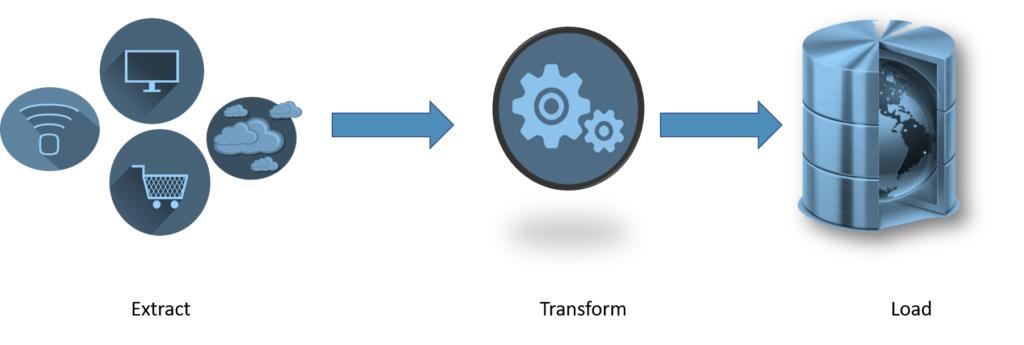

To enable information sharing at this scale and prevent data silos, organizations use the extract, transform, and load (ETL) practice to prepare, pass, and store data between systems. ETL tools can standardize and scale data pipelines for the massive amounts of data that businesses handle across all of their activities.

Why ETL Tools?

ETL tools are made to enable ETL procedures, which include extracting data from many sources, cleaning it up for consistency and quality, and storing it all together in data warehouses. When used appropriately, ETL technologies offer a consistent approach to data intake, sharing, and storage, simplifying data management techniques and enhancing data quality.
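The three stages described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular ETL tool works: the sample data, the `customers` table, and the cleaning rules are assumptions made for the example, and an in-memory SQLite database stands in for a data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real pipeline would read from files, APIs, or databases.
RAW_CSV = """id,name,email
1, Ada Lovelace ,ADA@EXAMPLE.COM
2,Grace Hopper,grace@example.com
3,,missing-name@example.com
"""


def extract(raw_text):
    """Extract: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transform: trim whitespace, lowercase emails, drop rows with no name."""
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        if not name:
            continue  # skip incomplete records
        cleaned.append((int(row["id"]), name, row["email"].strip().lower()))
    return cleaned


def load(records, connection):
    """Load: store the cleaned records in one table (SQLite stands in for a warehouse)."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
    )
    connection.executemany("INSERT INTO customers VALUES (?, ?, ?)", records)
    connection.commit()


warehouse = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), warehouse)
stored_rows = warehouse.execute(
    "SELECT id, name, email FROM customers ORDER BY id"
).fetchall()
print(stored_rows)
```

Keeping each stage in its own function mirrors how ETL tools separate connectors, transformations, and destinations, so one stage can change without touching the others.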

ETL tools support data-driven platforms and organizations. For instance, the primary benefit of customer relationship management (CRM) platforms is that all business operations are carried out through the same user interface. This makes it easy to share CRM data between teams, which gives a more comprehensive picture of business performance and of progress toward objectives.

Enterprise Software ETL Tools

Enterprise software ETL tools are designed and maintained by commercial organizations. Since these businesses were the first to advocate for ETL tools, their solutions are typically the most stable and mature on the market. They usually provide graphical user interfaces (GUIs) for designing ETL pipelines, support for most relational and non-relational databases, and thorough documentation and user communities. Although they offer more functionality, enterprise software ETL tools typically carry a larger price tag and, because of their complexity, require more employee training and integration services to onboard.

Open-Source ETL Tools

Given the growth of the open-source movement, the emergence of open-source ETL solutions is not surprising. Many of the open-source ETL solutions available today are free and provide GUIs for creating data-sharing processes and observing the flow of information.

A particular advantage of open-source solutions is that businesses can access the source code to examine the tool’s architecture and extend its features. However, because they are frequently not backed by for-profit corporations, open-source ETL tools can vary in maintenance, documentation, usability, and capability.

Cloud-Based ETL Tools

As a result of the growing adoption of cloud and integration platform-as-a-service technologies, cloud service providers (CSPs) now provide ETL tools built on their infrastructure.

Efficiency is a particular benefit of cloud-based ETL tools. Cloud technology provides low latency, high availability, and elasticity, allowing computing resources to scale to match the current demand for data processing. If the organization also stores its data with the same CSP, the pipeline is further streamlined because all operations occur within a shared infrastructure.

The drawback of cloud-based ETL tools is that they are limited to the CSP’s environment. They do not support data stored in other clouds or in on-premises data centers unless it is first moved onto the provider’s cloud storage.

Custom ETL Tools

Businesses with development resources can use standard programming languages to create bespoke ETL tools. The main benefit of this approach is the ability to develop a solution specifically tailored to the organization’s priorities and workflows. SQL, Python, and Java are popular languages for creating ETL solutions.

The biggest disadvantage of this strategy is the amount of internal resources needed to develop a unique ETL tool, including testing, maintenance, and updates. Onboarding new users and developers, who will all be unfamiliar with the platform, also requires planning.
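As a rough sketch of what a bespoke tool might look like in Python, the example below chains small, organization-specific steps into a pipeline. The step names, the `@test.invalid` rule, and the region mapping are all hypothetical; they only illustrate how custom rules can be expressed as plain functions.

```python
def run_pipeline(records, steps):
    """Pass the records through each step in order and return the final list."""
    for step in steps:
        records = step(records)
    return list(records)


# Hypothetical organization-specific rules, each written as a small step.
def drop_test_accounts(records):
    """Filter out internal test accounts."""
    return (r for r in records if not r["email"].endswith("@test.invalid"))


def add_region(records):
    """Derive a region field from the country code."""
    for r in records:
        yield {**r, "region": "EU" if r["country"] in {"DE", "FR"} else "OTHER"}


orders = [
    {"email": "a@example.com", "country": "DE"},
    {"email": "qa@test.invalid", "country": "FR"},
    {"email": "b@example.com", "country": "US"},
]
result = run_pipeline(orders, [drop_test_accounts, add_region])
print(result)
```

Each step can be tested on its own, which is also where much of the ongoing maintenance effort of a custom tool tends to go.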

Now that you are familiar with ETL tools and their main categories, let’s look at how to assess these options to find the best fit for your firm’s data processes and use cases.

How to Assess ETL Tools

Every firm has a distinctive business model and culture, and these characteristics are reflected in the data that a company gathers and values. However, there are universal criteria that every organization can use to evaluate ETL tools.

These criteria are listed below:

Use case

Consider your use cases carefully when choosing an ETL tool. If your organization is small or your data analysis needs are modest, you might not need a solution as robust as the ones large enterprises with complex datasets rely on.

Budget

Budget is another crucial factor when assessing ETL software. Although open-source software is often free to use, it may not have all the features or support of enterprise-grade software. The resources needed to run the tool matter as well: if the software requires a lot of coding, you will need to hire and retain developers.

Capabilities

The best ETL solutions can be tailored to the specific data requirements of different teams and business processes. ETL tools can enforce data quality and reduce the labor needed to examine datasets by automating tasks such as de-duplication. Additionally, data connections make it easier to share data across platforms.
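To make the de-duplication idea concrete, here is a minimal Python sketch. It assumes records are dictionaries and that two records are duplicates when their key fields match after trimming and lowercasing; real tools usually offer more sophisticated matching, and the field names are hypothetical.

```python
def deduplicate(records, key_fields):
    """Keep the first record seen for each normalized key, preserving order."""
    seen = set()
    unique = []
    for record in records:
        # Normalize the key so trivial differences do not hide duplicates.
        key = tuple(str(record[field]).strip().lower() for field in key_fields)
        if key in seen:
            continue
        seen.add(key)
        unique.append(record)
    return unique


contacts = [
    {"email": "ada@example.com", "name": "Ada"},
    {"email": "ADA@example.com ", "name": "Ada L."},
    {"email": "grace@example.com", "name": "Grace"},
]
unique_contacts = deduplicate(contacts, ["email"])
print(unique_contacts)
```

Keeping the first occurrence is only one possible policy; a pipeline might instead keep the most recent record or merge fields from all duplicates.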

Data Sources

ETL tools should be able to meet data where it lives, whether on-premises or in the cloud. The components of an ETL tool that create links to data sources are known as ETL connectors. Organizations may also have complex data structures or unstructured data in various formats. The ideal solution can extract information from all of these sources and store it in standardized formats.
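The role of connectors can be illustrated with a short Python sketch in which two sources with different formats are mapped to one standardized record shape. The source layouts and the target fields (`customer_id`, `email`) are assumptions chosen for the example.

```python
import csv
import io
import json


def read_csv_source(text):
    """Connector for a hypothetical CSV export with 'id' and 'email' columns."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"customer_id": int(row["id"]), "email": row["email"].lower()}


def read_json_source(text):
    """Connector for a hypothetical JSON API payload with nested contact details."""
    for item in json.loads(text):
        yield {
            "customer_id": int(item["customerId"]),
            "email": item["contact"]["email"].lower(),
        }


CSV_EXPORT = "id,email\n1,Ada@Example.com\n"
JSON_EXPORT = '[{"customerId": 2, "contact": {"email": "grace@example.com"}}]'

# Each connector hides its source's format and emits the same record shape.
CONNECTORS = [(read_csv_source, CSV_EXPORT), (read_json_source, JSON_EXPORT)]
standardized = [
    record for connector, payload in CONNECTORS for record in connector(payload)
]
print(standardized)
```

Because every connector emits the same shape, the rest of the pipeline does not need to know where a record came from.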

Technical literacy

It’s important to take into account how comfortable developers and end users are with data and code. If the tool involves manual coding, for instance, the development team should ideally already work in the languages it is built on. If users are unfamiliar with building complex queries, a tool that automates that work would be useful.

Next, let’s examine tools that can power your ETL pipelines, grouped by the types described above.

ETL Tools

- Integrate.io

- IBM DataStage

- Oracle Data Integrator

- Fivetran

- SAS Data Management

- Talend Open Studio

- Pentaho Data Integration

- Singer

- Hadoop

- Dataddo

- AWS Glue

- Azure Data Factory

- Google Cloud Dataflow

- Stitch

- Informatica PowerCenter

- Skyvia

Integrate.io

Price: Free 14-day trial & flexible paid plans available

Type: Cloud

With hundreds of connectors and a full offering (ETL, ELT, API Generation, Observability, Data Warehouse Insights), Integrate.io is a market-leading low-code data integration platform that enables users to create and manage automated, secure pipelines quickly. Get regularly updated data to support the delivery of data-backed, actionable insights to help you cut your CAC, boost your ROAS, and promote go-to-market success.

The platform allows you to effortlessly aggregate data to warehouses, databases, data storage, and operational systems while being extremely scalable with any data volume or use case.

IBM DataStage

Price: Free trial with paid plans available

Type: Enterprise

IBM DataStage is a data integration tool built on a client-server architecture. Tasks are created on a Windows client and executed against a central data repository on a server. The tool supports both ETL and extract, load, and transform (ELT) models, and it integrates data from a variety of sources and applications while maintaining good performance.

IBM DataStage was originally created for on-premises deployment. DataStage for IBM Cloud Pak for Data is a version of the tool that supports cloud deployment.

Oracle Data Integrator

Price: Pricing available on request

Type: Enterprise

Oracle Data Integrator (ODI) is designed for building, managing, and maintaining data integration workflows across businesses. From high-volume batch loading to data services for service-oriented architecture, ODI serves the full range of data integration needs. It also has built-in connections with Oracle GoldenGate and Oracle Warehouse Builder and supports parallel task execution for faster data processing.

ODI and other Oracle solutions can be monitored through Oracle Enterprise Manager, which improves visibility at the tool level.

Fivetran

Price: $60/month for Standard Select plan; $120/month for Starter plan; $180/month for Standard plan; $240/month for Enterprise plan

Type: Enterprise

Fivetran is a managed data pipeline service. It uses pre-built connectors to extract data from applications, databases, and files and load it into a data warehouse, which reduces the amount of pipeline code that teams have to write and maintain themselves.

SAS Data Management

Price: Pricing available on request

Type: Enterprise

SAS Data Management is a data integration framework developed to connect to data in the cloud, legacy systems, and data lakes, among other places. These connections give users a comprehensive view of the company’s operational processes. The technology streamlines work by reusing data management rules and by enabling non-IT stakeholders to access and examine data on the platform.

SAS Data Management is also adaptable: it works across several computing environments and databases and integrates with third-party data modeling tools to create visualizations.

Talend Open Studio

Price: Free

Type: Open Source

Talend Open Studio is a free, open-source program for constructing data pipelines quickly. Through Open Studio’s drag-and-drop GUI, data components can be connected to run jobs against Excel, Dropbox, Oracle, Salesforce, Microsoft Dynamics, and other data sources. With its built-in connectors, Talend Open Studio can access data from various platforms, including relational database management systems, software-as-a-service platforms, and packaged applications.

Pentaho Data Integration

Price: Pricing available on request

Type: Open Source

Pentaho Data Integration (PDI) manages data integration processes that collect, cleanse, and store data in a uniform and consistent format. The application also distributes this data to users for analysis and supports access to IoT data for machine learning.

PDI also provides the Spoon desktop client for creating transformations, scheduling jobs, and manually starting processing tasks as needed.

Singer

Price: Free

Type: Open Source

Singer is an open-source scripting solution for improving data transfer between an organization’s applications and storage. Singer defines how data extraction scripts and data loading scripts communicate, so that data can be extracted from any source and loaded into any destination. Because the scripts communicate in JSON, they can handle rich data types, enforce data structures through JSON Schema, and be written in any programming language.
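The sketch below imitates this exchange in plain Python using only the standard library. In Singer, the extraction script (a tap) and the loading script (a target) are separate programs connected by a pipe; here an in-memory buffer stands in for that pipe, the `users` stream and its fields are hypothetical, and the target is simplified in that it does not validate records against the schema.

```python
import io
import json


def tap(rows, stream, out):
    """Simplified tap: write SCHEMA, RECORD, and STATE messages as JSON lines."""
    schema = {
        "type": "object",
        "properties": {"id": {"type": "integer"}, "name": {"type": "string"}},
    }
    out.write(json.dumps({"type": "SCHEMA", "stream": stream,
                          "schema": schema, "key_properties": ["id"]}) + "\n")
    for row in rows:
        out.write(json.dumps({"type": "RECORD", "stream": stream,
                              "record": row}) + "\n")
    # STATE lets a later run resume from where this one stopped.
    out.write(json.dumps({"type": "STATE",
                          "value": {"last_id": rows[-1]["id"]}}) + "\n")


def target(lines):
    """Simplified target: collect records per stream and remember the last state."""
    loaded_streams = {}
    state = None
    for line in lines:
        message = json.loads(line)
        if message["type"] == "RECORD":
            loaded_streams.setdefault(message["stream"], []).append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return loaded_streams, state


pipe = io.StringIO()  # stands in for the pipe between two separate processes
tap([{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}], "users", pipe)
pipe.seek(0)
loaded_streams, state = target(pipe)
print(loaded_streams, state)
```

Because the only contract between the two sides is the stream of JSON messages, either side could be rewritten in another language without affecting the other.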

Hadoop

Price: Free

Type: Open Source

The Apache Hadoop software library is a framework for processing massive data sets by dividing the computational load among clusters of computers. The library is built to provide high availability while drawing on the processing power of many machines. Rather than relying on hardware for reliability, it is designed to detect and handle failures at the application layer. The framework also provides job scheduling and cluster resource management through the Hadoop YARN module.
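The idea of dividing work across machines can be illustrated with the MapReduce programming model that Hadoop includes. The following Python sketch is only a single-process simulation and does not use any Hadoop API: each chunk stands in for the share of data a cluster node would process, and the sample lines are made up.

```python
from collections import defaultdict


def map_phase(chunk):
    """Map: emit a (word, 1) pair for every word in this chunk of lines."""
    return [(word.lower(), 1) for line in chunk for word in line.split()]


def shuffle(mapped_chunks):
    """Shuffle: group the values emitted by all mappers by key."""
    grouped = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the grouped values for each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


lines = ["extract transform load", "extract load transform", "load"]
chunks = [lines[:2], lines[2:]]  # each chunk would go to a different node
word_counts = reduce_phase(shuffle(map_phase(chunk) for chunk in chunks))
print(word_counts)
```

On a real cluster, the map and reduce functions run on different machines in parallel and the framework handles the shuffle, scheduling, and recovery from failed tasks.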

Dataddo

Price: Free with paid plans available

Type: Cloud

Dataddo is a cloud-based ETL tool that requires no coding, so both technical and non-technical users can integrate data flexibly. It fits into existing technology architecture and offers a broad choice of connectors, fully customizable metrics, and a central system for managing all data pipelines at once.

Users can start deploying pipelines as soon as an account is created, and the Dataddo team handles all API changes, so pipelines don’t need to be maintained. Additional connectors can be added on demand in ten business days. The platform is SOC 2, ISO 27001, and GDPR compliant.

AWS Glue

Price: Free with paid plans available

Type: Cloud

AWS Glue is a cloud-based data integration tool that supports both visual and code-based clients, serving technical and non-technical business users alike. The serverless platform offers several features, including the AWS Glue Data Catalog for locating data across the enterprise and AWS Glue Studio for visually creating, running, and updating ETL pipelines.

For more direct data connections, AWS Glue also allows custom SQL queries.

Azure Data Factory

Price: Free trial with paid plans available

Type: Cloud

Azure Data Factory is a serverless data integration service that scales to match compute demand and is billed on a pay-as-you-go basis. The service provides both no-code and code-based interfaces and can retrieve data through more than 90 built-in connectors.

Azure Data Factory also works with Azure Synapse Analytics to offer sophisticated data analysis and visualization. In addition, the platform supports continuous integration and deployment workflows for DevOps teams and Git for version control.

Google Cloud Dataflow

Price: Free trial with paid plans available

Type: Cloud

Google Cloud Dataflow is a fully managed data processing service designed to make efficient use of compute resources and automate resource management. The service’s primary goal is to lower processing costs through flexible scheduling and automatic scaling of resources to fit demand. As the data is transformed, Google Cloud Dataflow also provides AI capabilities to power predictive analysis and real-time anomaly detection.

Stitch

Price: Free trial with paid plans available

Type: Cloud

Stitch is a data integration solution that can source data from more than 130 platforms, services, and apps. The solution consolidates this data in a data warehouse without the need for manual coding. Since Stitch is open source, development teams are free to add new features and sources as needed. Stitch also places a strong emphasis on compliance by giving users the ability to control and govern data to meet both internal and external regulations.

Informatica PowerCenter

Price: Free trial with paid plans available

Type: Enterprise

Informatica PowerCenter is a metadata-driven platform that focuses on optimizing data pipelines and improving collaboration between business and IT teams. PowerCenter parses complex data types such as JSON, XML, PDF, and Internet of Things machine data. It then automatically validates the transformed data against predefined standards.

The platform delivers high availability, efficient performance, and pre-built transformations for ease of use, as well as the capacity to scale to meet compute demand.

Skyvia

Price: Starts free; $15/month for the Basic plan; $79/month for the Standard plan; $399/month for the Professional plan

Type: Cloud

Skyvia offers fully configurable data synchronization. You can choose exactly what you want to extract, including custom fields and objects. Skyvia uses automatically created primary keys, so there’s no need to alter the format of your data.

Users of Skyvia can also replicate cloud data, export data to CSV for sharing, and import data into cloud apps and databases.
